Coming back after Christmas Day, I got home and said, “Okay Google, switch on the lights”, as usual. And then I heard something along the lines of “Sorry, I’m not connected to the internet!”. Wait, what? Not that losing the internet again surprised me, but *why* should I stay in the dark when I have no internet connection? Well, because the AI used for voice recognition runs in the cloud: no cloud means no light! But couldn’t we do the same thing locally, without an internet connection? We could indeed, and this is what we call Edge Artificial Intelligence, or Edge AI for short. In this article, we will discuss how this technology can solve my problem and what else it can offer us.

What is Edge Intelligence?

Knowing that the problem with my lightbulb comes from a loss of connectivity does not make it any less frustrating. But we have a fancy-sounding solution: Edge AI.

For real-time applications, Edge Artificial Intelligence moves the AI closer to where it is really needed: into the device itself, instead of relying on a server in the cloud.

Before we move on, we should understand the basics of how machine learning works.

What is Edge ML? A reminder on machine learning

The two main phases of any machine-learning-based solution are training and inference, which can be described as follows:

- Training is the phase where a (very) large amount of known data is fed to a machine learning algorithm so that it can “learn” (surprise!) what to do. From this data, the algorithm outputs a “model” containing the results of its learning. This step is extremely demanding in processing power.

- Inference is using the learned model on new data to infer what it should recognize. In our case, this would be interpreting what I meant when I asked to turn on the lights.
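To make the two phases concrete, here is a toy Python sketch: a single perceptron trained on four made-up labeled samples. The features (`heard_wake_word`, `heard_word_lights`) and the data are hypothetical, and real speech models are vastly larger, but the split is the same: `train_perceptron` sweeps over all the labeled samples many times, while `predict` is one cheap pass over a single new input. A learning rate of 1 with integer features keeps the arithmetic exact.

```python
# Toy training vs. inference sketch. Each made-up labeled sample is
# ([heard_wake_word, heard_word_lights], label); label 1 means "lights on".
SAMPLES = [
    ([0, 0], 0),
    ([0, 1], 0),
    ([1, 0], 0),
    ([1, 1], 1),
]


def predict(weights, bias, features):
    """Inference: one pass over a single input with frozen weights."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1):
    """Training: sweep the labeled data repeatedly, nudging weights on errors."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)  # 0, +1 or -1
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias  # the learned "model"


# Training happens once, typically on a powerful machine...
model = train_perceptron(SAMPLES)
# ...while inference runs on each new observation, possibly on the device.
print(predict(*model, [1, 1]))  # 1: wake word and "lights" heard, so lights on
```

Note how lopsided the work is: training evaluated the model dozens of times and updated it on every mistake, while inference is a single weighted sum.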

The “known data” used in the training phase is called labeled data: each piece of data (sound, image, …) has a tag attached, like a little sticker, describing what it is. A speech recognition AI is trained on thousands of hours of labeled voice data so that it can extract the text from a spoken sentence. Natural language understanding can then convert that text into commands a computer can understand.
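The last step, from text to command, can be pictured with a deliberately simplified Python sketch. It is not how a real voice assistant works: a hand-written keyword table (`INTENTS`, hypothetical) stands in for a trained language model, and the speech is assumed to be already transcribed to text. It does illustrate the point of this article, though: nothing here needs a network connection.

```python
import re

# Hypothetical intent table: (trigger phrases, required word, command).
# A real assistant would use a trained model instead of hand-written rules.
INTENTS = [
    (("turn on", "switch on"), "light", ("lights", "on")),
    (("turn off", "switch off"), "light", ("lights", "off")),
]


def text_to_command(text):
    """Return a (device, action) tuple for a transcribed sentence, or None."""
    # Lowercase and replace punctuation with spaces, then collapse spaces,
    # so "Okay Google, switch on the lights!" can match "switch on".
    normalized = re.sub(r"[^a-z ]+", " ", text.lower())
    normalized = " ".join(normalized.split())
    for phrases, required_word, command in INTENTS:
        if required_word in normalized and any(p in normalized for p in phrases):
            return command
    return None  # not a command we understand


print(text_to_command("Okay Google, switch on the lights"))  # ('lights', 'on')
```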

Once trained, a model needs only a fraction of the processing power used for training to perform inference. The main reason is that inference processes a single set of input data at a time, while training typically has to work through a huge number of samples. The production model used for inference is also “frozen” (it can no longer learn), may have its less relevant features trimmed out, and is carefully optimized for the target environment. The net result is that it can run directly on an embedded device: the Edge. This gives decision-making power to the device, allowing it to be autonomous. That is Edge AI!
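The article does not say exactly which optimizations a production model goes through, but two common ones illustrate the idea: pruning, which zeroes out weights too small to matter, and 8-bit quantization, which stores each weight as a small integer plus one shared scale factor (an int8 weight takes a quarter of the memory of a 32-bit float). Below is a minimal pure-Python sketch under those assumptions, with made-up weights and an arbitrary pruning threshold; real frameworks provide far more careful versions of both steps.

```python
# Two common ways to slim down a frozen model: magnitude pruning and
# symmetric 8-bit quantization. The weight values below are made up.

def prune(weights, threshold):
    """Zero out weights whose magnitude is below `threshold`."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [v * scale for v in q]


trained = [0.82, -0.01, 0.40, -0.63, 0.003]  # hypothetical frozen weights
pruned = prune(trained, threshold=0.05)     # tiny weights contribute little
q, scale = quantize_int8(pruned)
print(q)  # [127, 0, 62, -98, 0]
```

Each dequantized weight is off by at most half a `scale` step, which is the accuracy traded for a smaller, faster model.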

Hardware requirements of Edge Computing

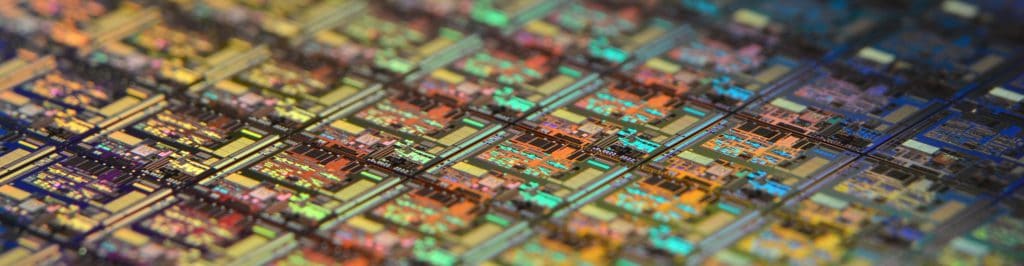

Like many new concepts, the technology behind Edge Artificial Intelligence (Edge AI) has been around for some time: machine learning algorithms are common on computers and smartphones, where they work just fine. But what about embedded devices? Well, the tools and hardware are now coming together into a solution that makes sense, notably thanks to:

- Increases in the processing power of devices, and the availability of modules providing hardware acceleration for AI (GPUs and ASICs)

- Constant improvement of both AI models and their performance

- The quality of the tools and resources that make the journey easier for data scientists, AI specialists, and developers

This means we can now integrate Artificial Intelligence solutions not only into supercomputers, but also into cars, smartphones, web pages, Wi-Fi routers, and even home security systems.

Are there challenges to AI inference on a device?

Yes: Edge Artificial Intelligence is first and foremost a matter of choosing the right hardware.

AI inference on Edge devices can run on several kinds of hardware:

- CPU: On smartphones and embedded devices, any recent ARM CPU (Cortex-A7 and higher) is more than capable of handling it. It may not be the fastest or most efficient solution, but it is usually the easiest. TensorFlow Lite is commonly used and provides key features of the full TensorFlow framework. The TensorFlow blog has great information about accelerated inference on ARM CPUs.

- GPU: Out-of-the-box support for GPUs varies, but they typically provide high throughput (i.MX6 Vivante, NVIDIA Jetson), allowing a higher inference rate and lower latency. Using the GPU also offloads a large workload from the CPU. You may want to have a look at our post about Yocto for NVIDIA Jetson.

- AI-specialized hardware (ASICs, TPUs): This is a fast-growing category. These chips provide the most efficient AI solutions, but may prove expensive or harder to design in.

Let’s dig a little deeper into the last two solutions.

One possibility is to leverage the processing power and parallel computing capabilities of GPUs. An AI is like a virtual brain with hundreds of neurons: it looks very complex but is actually made of a large number of simple elements (neurons, for the brain). Well, GPUs were made for exactly that: applying simple, independent operations to every single point (pixel or vertex) on your screen. Most machine learning frameworks (TensorFlow, Caffe, AML, …) are designed to take advantage of the right hardware when it is present. NVIDIA boards are good candidates, but virtually any GPU can be leveraged.
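To see why this workload suits a GPU, here is a small Python sketch of one layer of artificial neurons. Each `neuron` is just a weighted sum followed by a ReLU activation (one common choice, assumed here), and within a layer no neuron depends on another's output, so they can all be evaluated at once. The layer values are hypothetical, and the thread pool only demonstrates that independence: in CPython, the global interpreter lock keeps threads from speeding up pure-Python arithmetic like this.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron(weights, bias, inputs):
    """One artificial neuron: a weighted sum of its inputs, then ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def layer_sequential(layer, inputs):
    """Evaluate the neurons of a layer one after the other."""
    return [neuron(w, b, inputs) for w, b in layer]


def layer_parallel(layer, inputs, workers=4):
    """Evaluate the same neurons concurrently.

    Each neuron only reads the shared inputs and its own weights, so no
    neuron waits on another. A GPU exploits this with thousands of cores;
    the thread pool here illustrates the independence, not the speed-up.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda wb: neuron(wb[0], wb[1], inputs), layer))


# Hypothetical layer of three neurons, each given as (weights, bias).
LAYER = [([1.0, 2.0], 0.5), ([-1.0, 1.0], 0.0), ([0.5, 0.5], -1.0)]
INPUTS = [2.0, 1.0]

print(layer_parallel(LAYER, INPUTS))  # [4.5, 0.0, 0.5]
```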

Another solution is to integrate specialized hardware. Machine learning can be accelerated through custom hardware, and the two contenders are AI-specific chips and AI ASICs (Application-Specific Integrated Circuits). And these are moving fast! The first version of Google’s Edge TPU (Tensor Processing Unit) is now available to a few lucky beta testers (including us!). ARM has unveiled its Machine Learning and Object Detection processors, and Intel, Microsoft, and Amazon are working on their own solutions too. Right now, the best option is a GPU supported by the AI tools you are using. Google’s Edge TPU should become more widely available soon but is not yet production-ready, and a custom ASIC is expensive to design and produce, so for now it is a privilege reserved for large-scale, specific products.
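The hardware advice above boils down to a preference order with a safe fallback. Below is a hypothetical Python sketch of that decision: prefer a specialized AI accelerator, then a GPU, but only if the AI tools you use actually support it, and otherwise fall back to the CPU. The backend names and `choose_backend` are made up for illustration; in TensorFlow Lite, for instance, this role is played by delegates, whose real API differs.

```python
# Hypothetical backend names, not identifiers from a real framework.
# Ordered from most efficient to least; the CPU is the universal fallback.
ACCELERATORS = ("ai_accelerator", "gpu")


def choose_backend(detected, supported_by_framework):
    """Return the first accelerator that is both present and supported.

    `detected` is the set of accelerators found on the board, and
    `supported_by_framework` the set your AI tools can target. The CPU is
    assumed to be always present and supported.
    """
    for backend in ACCELERATORS:
        if backend in detected and backend in supported_by_framework:
            return backend
    return "cpu"


# A board with a GPU that the AI tools can actually use:
print(choose_backend({"gpu"}, {"gpu"}))  # gpu
# An accelerator is present, but the tooling cannot target it yet:
print(choose_backend({"ai_accelerator"}, {"gpu"}))  # cpu
```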

But beyond the ‘avant-garde’ factor, technology in a product, Edge Artificial Intelligence included, should add value.

So here is a short list of Edge Artificial Intelligence advantages and disadvantages, to help you decide if this is the right solution for your devices.