Perhaps you have already heard of Edge IoT? Or of Edge Computing? If not, stay with me and we’ll look at them together. If you have, you can head straight to the practical part in the article “IoT Edge – Demo instead of words”. Either way, we will see together how the connected objects of tomorrow will be much more intelligent and autonomous than those of today.

It all starts with the observation that a full-cloud approach has its limits. Who can be satisfied with Siri taking 2 seconds to answer any question, only working with 4G, and not even being able to sync a contact from your address book when you are offline?

Edge computing creates new perspectives for IoT devices

First of all, what is Edge Computing? You will have noticed that nowadays everything is connected to the Internet, and some devices can’t do anything without it. The problem is that networks end up overloaded with requests and data.

Result? Everything runs slower. This may not matter much when a user requests a web page and has to wait half a second for their favorite site to appear. It matters a lot more when an automaton or an autonomous car is waiting for a server’s response before making a critical decision, such as an emergency stop.

That’s where Edge Computing comes in. The goal is to get back to basics: instead of constantly querying the web, devices analyze the data collected by their own sensors and make their own decisions.

This is already the main principle behind LoRa and SigFox networks, where bandwidth is limited: only the important data should be transmitted.
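One simple way for a device to "pass only important data" is a deadband filter: a reading is transmitted only when it differs from the last transmitted value by more than a threshold. The sketch below is a generic illustration, not something specific to LoRa or SigFox; the `DeadbandFilter` class, the `threshold` parameter and the sample temperatures are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class DeadbandFilter:
    """Forward a reading only if it moved more than `threshold` (hypothetical
    parameter) away from the last value that was actually transmitted."""
    threshold: float
    last_sent: Optional[float] = None

    def should_send(self, value: float) -> bool:
        # Always send the first reading so the server has a baseline.
        if self.last_sent is None or abs(value - self.last_sent) > self.threshold:
            self.last_sent = value
            return True
        return False


def filter_readings(readings: List[float], threshold: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs that would go over the radio link."""
    f = DeadbandFilter(threshold)
    return [(i, v) for i, v in enumerate(readings) if f.should_send(v)]


if __name__ == "__main__":
    temperatures = [21.0, 21.1, 21.2, 21.1, 23.5, 23.6, 23.4, 19.0]  # made-up samples
    sent = filter_readings(temperatures, threshold=1.0)
    print(sent)
    print(f"{len(sent)}/{len(temperatures)} readings transmitted")
```

With a 1.0 threshold, only 3 of the 8 sample readings leave the device; the small fluctuations stay local.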

Edge IoT means bringing some intelligence back into the device for increased efficiency

But now you will say: “If we already know how to do this, what’s new?” The big difference today is the type of algorithm that can make these decisions. Take the example of our autonomous car. It is equipped with a front camera so that it can tell whether it is about to collide with an object, a person or another car.

If we are in “fully connected” mode, the situation is as follows:

- The camera takes a picture

- The on-board computer connects to the Internet, if it can

- It sends the photo to a server

- The server processes the photo

- The server returns the answer to the car

- The car analyzes the answer

- The car reacts

That’s a lot of steps, and for a car driving on a highway at 70 mph you’d better hope that the 4G is working well, especially since many decisions have to be made in a short time. Time is of the essence: if a round trip to the server takes around 500 ms, a car at 70 mph (about 31 m/s) covers more than 15 meters before it even gets the answer. So for reasons of safety and responsiveness, we move into “Edge Computing” mode and embed the shape recognition server directly in the car:

- The camera takes the picture

- The on-board computer analyzes the photo

- The car reacts
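The two pipelines above can be compared with some back-of-the-envelope arithmetic: the distance the car travels before it can react is simply its speed multiplied by the total decision latency. The per-step latencies below are illustrative assumptions, not measurements; only the 70 mph speed comes from the example.

```python
# 1 mile = 1609.344 m and 1 hour = 3600 s, so 1 mph = 0.44704 m/s exactly.
MPH_TO_MPS = 0.44704

# Hypothetical per-step latencies in seconds (assumptions for illustration only).
CLOUD_PIPELINE = {
    "take picture": 0.03,
    "send photo, server processing, answer back": 0.50,
    "analyze answer": 0.01,
}
EDGE_PIPELINE = {
    "take picture": 0.03,
    "on-board analysis": 0.05,
}


def total_latency(pipeline: dict) -> float:
    """Sum of the step latencies, in seconds."""
    return sum(pipeline.values())


def distance_during_latency(speed_mph: float, latency_s: float) -> float:
    """Meters travelled before the car can react."""
    return speed_mph * MPH_TO_MPS * latency_s


if __name__ == "__main__":
    for name, pipeline in (("fully connected", CLOUD_PIPELINE), ("edge", EDGE_PIPELINE)):
        latency = total_latency(pipeline)
        meters = distance_during_latency(70, latency)
        print(f"{name}: {latency * 1000:.0f} ms -> {meters:.1f} m travelled at 70 mph")
```

With these assumed figures, the fully connected pipeline leaves the car about 17 m of "blind" travel versus about 2.5 m when the analysis runs on board.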

That’s already better. But Edge Computing doesn’t mean being completely cut off from the Internet. We still need to update the systems and continually improve the service. Our shape recognition server can be updated easily, but how do we do that while the car is running? And what if we don’t always have Internet connectivity? The network does not cover every place the car goes.

That’s the main principle of Edge Computing: combining periods with and without connectivity without ever degrading the quality of service.
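A common pattern for living with intermittent connectivity is store-and-forward: outgoing messages are queued locally while the link is down and flushed in order once it comes back. Azure IoT Edge’s hub module provides its own store-and-forward for offline periods; the sketch below only illustrates the idea in plain Python. The `StoreAndForward` class, its `send` callback and the drop-oldest policy are hypothetical choices.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Queue outgoing messages while offline and deliver them in order later.

    The queue is bounded so a long outage cannot exhaust device memory;
    when it is full, the oldest message is dropped (a hypothetical policy).
    """

    def __init__(self, send: Callable[[str], bool], max_size: int = 1000) -> None:
        self._send = send  # returns True if the message was delivered
        self._queue: Deque[str] = deque(maxlen=max_size)

    def publish(self, message: str) -> None:
        self._queue.append(message)  # deque(maxlen=...) evicts the oldest item
        self.flush()

    def flush(self) -> int:
        """Deliver queued messages; stop at the first failure. Returns the count sent."""
        delivered = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline: keep the message for the next attempt
            self._queue.popleft()
            delivered += 1
        return delivered

    def pending(self) -> int:
        return len(self._queue)


if __name__ == "__main__":
    link = {"online": False}  # simulated connectivity state
    received = []

    def fake_send(msg: str) -> bool:
        # Simulated uplink: delivery succeeds only while the link is up.
        if link["online"]:
            received.append(msg)
        return link["online"]

    buffer = StoreAndForward(fake_send, max_size=3)
    for i in range(5):
        buffer.publish(f"reading-{i}")  # offline: only the 3 newest are kept
    link["online"] = True
    buffer.flush()
    print(received)
```

The bound and the eviction policy are the real design decisions here: a car that stays offline for hours has to choose between losing old data and running out of storage.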

We will therefore need to manage many instances of different devices, each evolving in its own configuration, while keeping the whole fleet consistent and continuously improving the service.
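One way to keep a heterogeneous fleet consistent is to compare a desired configuration with the configuration each device reports, and plan actions only for the devices that have drifted. This is the same idea as the desired and reported properties of Azure IoT Hub device twins, but the sketch below is plain Python. The module names, versions and device IDs are hypothetical.

```python
from typing import Dict

# module name -> version (hypothetical data model)
Config = Dict[str, str]


def diff_config(desired: Config, reported: Config) -> Dict[str, str]:
    """Actions needed to bring one device in line with the desired state."""
    actions: Dict[str, str] = {}
    for module, version in desired.items():
        current = reported.get(module)
        if current is None:
            actions[module] = f"install {version}"
        elif current != version:
            actions[module] = f"update {current} -> {version}"
    # Modules running on the device but absent from the desired state.
    for module in reported.keys() - desired.keys():
        actions[module] = "remove"
    return actions


def plan_fleet(desired: Config, fleet: Dict[str, Config]) -> Dict[str, Dict[str, str]]:
    """Only devices that drifted from the desired state get a plan."""
    plan: Dict[str, Dict[str, str]] = {}
    for device, reported in fleet.items():
        actions = diff_config(desired, reported)
        if actions:
            plan[device] = actions
    return plan


if __name__ == "__main__":
    desired = {"obstacle-detection": "2.1", "telemetry": "1.0"}
    fleet = {
        "car-001": {"obstacle-detection": "2.1", "telemetry": "1.0"},
        "car-002": {"obstacle-detection": "2.0", "telemetry": "1.0"},
        "car-003": {"telemetry": "1.0", "debug-logger": "0.3"},
    }
    for device, actions in plan_fleet(desired, fleet).items():
        print(device, actions)
```

Because the plan is recomputed from reported state, a car that was offline during a rollout is simply picked up the next time it reports in.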

So, to sum up, what are the benefits of Edge Computing?

- Much lower cost of data transmission

- Decreased latency for the user

- Improvement in the quality of service

- Limitation or removal of a bottleneck (server side)

Pushed as far as it can go, Edge Computing would make each car completely independent of the network for most of the actions it performs while driving (obstacle detection, emergency stop…), while still staying connected to the servers to receive updates and to send data, so that each car’s experience helps improve the algorithms of all the others.

All of this is now possible because embedded devices keep getting more powerful and can compete with machines that once would have filled a room!

You can deploy edge computing on your devices with Azure IoT Edge

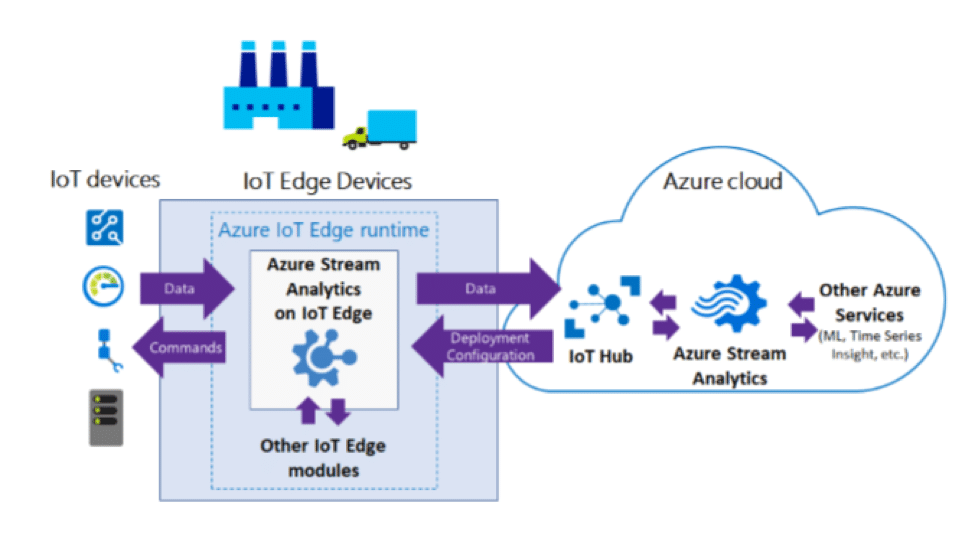

Now that we’ve covered Edge Computing and Edge IoT, let’s have a look at Azure IoT Edge, Microsoft’s solution to meet the demand for Edge Computing.

Using containers, this service makes it possible to package Azure cloud workloads such as Azure Cognitive Services, Azure Machine Learning, Azure Stream Analytics and Azure Functions so that they run locally on devices such as a Raspberry Pi.

In short, Azure IoT Edge allows:

- Configuration of IoT software (Azure containers)

- Deployment on devices via secure connectors

- Monitoring of the fleet from the cloud (Azure portal)

Interesting, right?

If you prefer diagrams, here is the one provided by Microsoft:

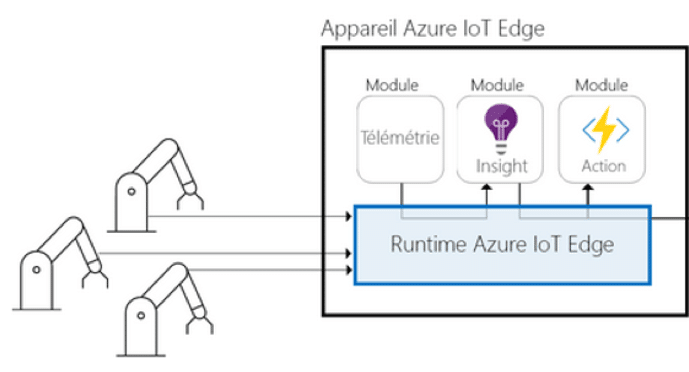

But how does it work on the device side? The device needs the IoT Edge runtime, provided by Microsoft, to be installed. The runtime hosts the “IoT Edge modules”: the containers that house the code to be executed.
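To make the runtime/module split more concrete, here is a toy, in-process model of a runtime that hosts modules and routes messages between them. It is loosely inspired by IoT Edge routes of the form “FROM a module output INTO another module input or `$upstream` (the cloud)”, but it is not the real runtime and involves no containers. `ToyEdgeRuntime`, `threshold_filter` and the 30 °C threshold are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A module handler receives (input_name, message) and may return
# an (output_name, message) pair to emit on one of its outputs.
Handler = Callable[[str, dict], Optional[Tuple[str, dict]]]


class ToyEdgeRuntime:
    """Hosts module handlers and routes messages between them (toy model)."""

    def __init__(self) -> None:
        self.modules: Dict[str, Handler] = {}
        # (source module, output) -> list of (target module or "$upstream", input)
        self.routes: Dict[Tuple[str, str], List[Tuple[str, str]]] = {}
        self.upstream: List[dict] = []  # stands in for messages sent to the cloud

    def add_module(self, name: str, handler: Handler) -> None:
        self.modules[name] = handler

    def add_route(self, source: str, output: str, target: str, target_input: str = "") -> None:
        self.routes.setdefault((source, output), []).append((target, target_input))

    def emit(self, source: str, output: str, message: dict) -> None:
        """Deliver a message emitted on `source`'s `output` to every routed target."""
        for target, target_input in self.routes.get((source, output), []):
            if target == "$upstream":
                self.upstream.append(message)
                continue
            result = self.modules[target](target_input, message)
            if result is not None:
                out_name, out_msg = result
                self.emit(target, out_name, out_msg)


def threshold_filter(input_name: str, msg: dict) -> Optional[Tuple[str, dict]]:
    # Forward only readings above a hypothetical 30 °C alert threshold.
    if msg["temperature"] > 30:
        return ("alerts", msg)
    return None


if __name__ == "__main__":
    runtime = ToyEdgeRuntime()
    runtime.add_module("filter", threshold_filter)
    runtime.add_route("sensor", "readings", "filter", "input1")
    runtime.add_route("filter", "alerts", "$upstream")
    # The sensor module is simulated by emitting on its output directly.
    for t in (25, 31, 28, 35):
        runtime.emit("sensor", "readings", {"temperature": t})
    print(runtime.upstream)
```

Only the two readings above the threshold reach the cloud; the decision is made by a module running on the device, which is exactly the Edge Computing idea.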

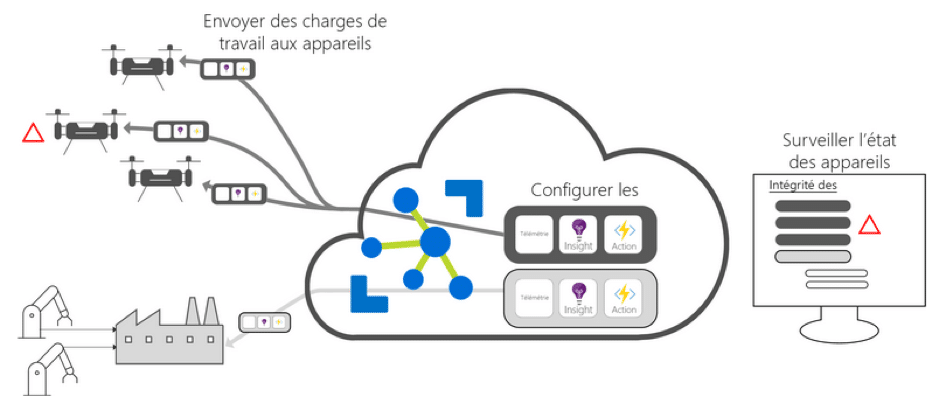

As mentioned above, connected devices can be managed remotely from the web: their code can be updated and information about their condition collected.
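On the monitoring side, a minimal health check can flag devices that have stopped sending heartbeats and summarize which versions are running across the fleet. The sketch below is plain Python, not the Azure portal’s mechanism; `DeviceStatus`, the 300-second timeout and the sample data are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DeviceStatus:
    last_seen: float      # timestamp (seconds) of the last heartbeat received
    module_version: str   # version the device reports it is running


def stale_devices(fleet: Dict[str, DeviceStatus], now: float, timeout_s: float = 300.0) -> List[str]:
    """Devices silent for longer than `timeout_s` (hypothetical threshold), sorted by ID."""
    return sorted(d for d, s in fleet.items() if now - s.last_seen > timeout_s)


def version_histogram(fleet: Dict[str, DeviceStatus]) -> Dict[str, int]:
    """How many devices run each reported version."""
    return dict(Counter(s.module_version for s in fleet.values()))


if __name__ == "__main__":
    now = 1_000.0
    fleet = {
        "car-001": DeviceStatus(last_seen=990.0, module_version="2.1"),
        "car-002": DeviceStatus(last_seen=500.0, module_version="2.0"),
        "car-003": DeviceStatus(last_seen=100.0, module_version="2.1"),
    }
    print("stale:", stale_devices(fleet, now))
    print("versions:", version_histogram(fleet))
```

A stale device is not necessarily broken: with Edge Computing it may simply be driving through an area without coverage, which is why the timeout should be tuned to the expected offline periods.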

That’s the theory. If you want to experience Edge IoT on a practical case, follow the link to my next article, a tutorial in which I show how to put IoT Edge into practice with an autonomous robot.