One of the significant evolutions accelerated by the COVID-19 pandemic has been the rise of speech recognition technologies. In a world where touching surfaces might lead to infection and sickness, it was no surprise that the capacity to interact with a device or machine by voice instead of by finger became very attractive. Consumer voice recognition software emerged long before the pandemic through products like Apple’s Siri and Google Home, but the pandemic accelerated adoption of the software, and device makers turned to engineers to determine whether their devices could be operated by voice.

In the world of speech synthesis and speech recognition technology, a name that is increasingly well known is Vivoka. A French company founded in 2015, Vivoka specializes in speech technologies including speech recognition, voice synthesis, and voice biometrics. With more than 30 international partners and software that is available in more than 50 languages, the company truly lives up to its motto ‘Your voice has no limit’.

Witekio has worked closely with Vivoka and its flagship Voice Development Kit (VDK). The VDK is, in fact, an SDK or software development kit, combined with an intuitive graphical user interface specialized for voice use cases. This VDK allows any company and any developer to configure an offline voice solution based on Vivoka’s C/C++ code base. Witekio engineers have used Vivoka’s VDK to add speech recognition technologies to connected devices – here’s how they did it.

From Grammar to Recognition to Response

The first step to implementing speech recognition technology is to develop a specific grammar for the Vivoka software to interpret. While every human language has its own grammar, the grammar for the device has its own rules and structure.

For example, most devices have a specific wake word that alerts the device that a command is incoming. Examples that are familiar to many include ‘Hey Siri’, ‘OK Google’ or ‘Alexa’. Along with the wake word, the grammar includes several keywords that, when spoken together, constitute a command. For example, to turn on a light using Apple Home a user might say:

Hey Siri: Turn on the kitchen light.

[Wake word] [Action] [Device]

The grammar defines what speech is important and what is supplemental and safely ignored. For example, that same user might instead say:

Hey Siri: Can you please turn on the kitchen light so I can see what I’m doing here?

[Wake word] [Action] [Device]

The result is the same despite the user input being more complicated, and a little more polite.
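
To make the idea concrete, here is a minimal C++ sketch of how such a grammar could pick the wake word, action and device out of a longer sentence. It is not Vivoka’s grammar format or the VDK API: the `Command` struct, the `parseUtterance` function and the small word lists are hypothetical, and the sketch works on already-transcribed text using naive substring matching.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical result type: the two slots that follow the wake word.
struct Command {
    std::string action;
    std::string device;
};

// A matched phrase and the position just past it in the text.
struct Match {
    std::size_t end;
    std::string phrase;
};

// Lowercase a copy of the input so matching is case-insensitive (ASCII only).
static std::string toLower(std::string text) {
    std::transform(text.begin(), text.end(), text.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return text;
}

// Find whichever phrase occurs earliest in `text` at or after `from`.
static std::optional<Match> findEarliest(const std::string& text,
                                         const std::vector<std::string>& phrases,
                                         std::size_t from) {
    std::optional<Match> best;
    std::size_t bestPos = std::string::npos;
    for (const auto& phrase : phrases) {
        const std::size_t pos = text.find(phrase, from);
        if (pos != std::string::npos && pos < bestPos) {
            bestPos = pos;
            best = Match{pos + phrase.size(), phrase};
        }
    }
    return best;
}

// Extract [wake word] [action] [device], in that order, and ignore every
// other word. Returns nothing if any of the three parts is missing.
std::optional<Command> parseUtterance(const std::string& utterance) {
    // Illustrative word lists; a real grammar would be far richer.
    static const std::string wakeWord = "hey siri";
    static const std::vector<std::string> actions = {"turn on", "turn off"};
    static const std::vector<std::string> devices = {"kitchen light", "bedroom light"};

    const std::string text = toLower(utterance);

    const std::size_t wakePos = text.find(wakeWord);
    if (wakePos == std::string::npos) return std::nullopt;

    const auto action = findEarliest(text, actions, wakePos + wakeWord.size());
    if (!action) return std::nullopt;

    const auto device = findEarliest(text, devices, action->end);
    if (!device) return std::nullopt;

    return Command{action->phrase, device->phrase};
}
```

With these word lists, both the short request and the polite version above yield the action `turn on` and the device `kitchen light`, while a sentence without the wake word is rejected.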

The Vivoka software relies on the grammar to determine what to do with each command. While the VDK is available in more than 50 languages, it is important to note that each language demands its own reference grammar. While wake words might be consistent across different languages – Alexa is Alexa in both English and French, for example – the grammar in each case will be different and developers will need to program a separate grammar for each language use case.

Voice Recognition Technology in Action

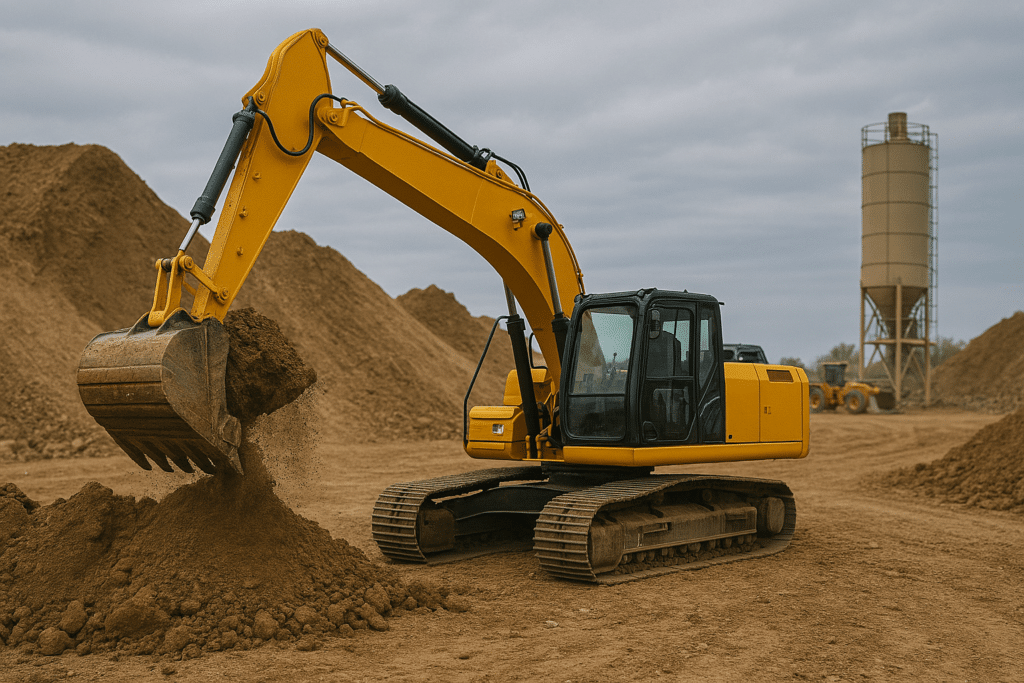

Recently Witekio put Vivoka’s speech recognition technology to the test by bringing its VDK and speech synthesis technology to a working model of a crane.

Witekio’s connected crane had previously been developed for the Consumer Electronics Show (CES) in Las Vegas but now, with the addition of voice recognition technology, a new use case could be proposed to potential customers.

The goal of the crane proof-of-concept project was to empower a crane operator to control the basic movements of the crane with only their voice, specifically:

- Turn: left, right, clockwise, counterclockwise

- Move: ascend, descend, lift, lower

- Shift: forward, backwards, fore, aft

Witekio developers began by programming a simple wake word before developing the more complex grammar that was required to move the crane. In addition, and making things a little more complex, the project called for the voice recognition to work in both English and German. As a result, two entirely separate grammars needed to be developed to achieve the same connected crane movements.
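
One way to keep the crane logic independent of the spoken language is to have each grammar map onto a shared set of commands. The C++ sketch below illustrates that idea under stated assumptions: the `CraneCommand` and `Language` enums and the `lookupCommand` function are hypothetical, the German words are illustrative translations rather than the project’s actual grammar, and only a subset of the movements listed above is covered.

```cpp
#include <map>
#include <optional>
#include <string>

// Hypothetical language-neutral commands the crane application understands.
enum class CraneCommand { TurnLeft, TurnRight, Lift, Lower, Forward, Backward };

enum class Language { English, German };

using Vocabulary = std::map<std::string, CraneCommand>;

// Each language gets its own vocabulary, but both map to the same commands.
const Vocabulary& vocabularyFor(Language language) {
    static const Vocabulary english = {
        {"left", CraneCommand::TurnLeft},    {"right", CraneCommand::TurnRight},
        {"lift", CraneCommand::Lift},        {"ascend", CraneCommand::Lift},
        {"lower", CraneCommand::Lower},      {"descend", CraneCommand::Lower},
        {"forward", CraneCommand::Forward},  {"fore", CraneCommand::Forward},
        {"backwards", CraneCommand::Backward}, {"aft", CraneCommand::Backward},
    };
    // Illustrative German vocabulary (an assumption, not the project's word list).
    static const Vocabulary german = {
        {"links", CraneCommand::TurnLeft},   {"rechts", CraneCommand::TurnRight},
        {"heben", CraneCommand::Lift},       {"senken", CraneCommand::Lower},
        {"vorwärts", CraneCommand::Forward}, {"rückwärts", CraneCommand::Backward},
    };
    return language == Language::German ? german : english;
}

// Translate one recognized keyword into a crane command.
// Expects an exact, lowercase match; unknown words yield nothing.
std::optional<CraneCommand> lookupCommand(Language language, const std::string& word) {
    const Vocabulary& vocabulary = vocabularyFor(language);
    const auto it = vocabulary.find(word);
    if (it == vocabulary.end()) return std::nullopt;
    return it->second;
}
```

In this arrangement, `lookupCommand(Language::English, "lift")` and `lookupCommand(Language::German, "heben")` resolve to the same `CraneCommand::Lift`, so the code that moves the crane never needs to know which language was spoken.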

The Witekio team applied the Vivoka VDK using PulseAudio and an application coded in C++. They customized the interface and integrated the Vivoka software to send its response to a websocket connected to a Qt application. That Qt application, in turn, was developed to move the crane when it receives an instruction from the voice recognition software.
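
The article does not describe the message format exchanged over the websocket, so the following C++ sketch only illustrates one plausible shape: a small JSON text message carrying the recognized action and direction. The `buildCraneMessage` function and the `action` and `direction` field names are assumptions, and the actual sending (through a WebSocket library on one side and, for instance, Qt’s `QWebSocket` on the other) is deliberately left out.

```cpp
#include <string>

// Escape the two characters that would otherwise break a JSON string literal.
// This is a simplification: control characters are not handled here.
static std::string escapeJson(const std::string& value) {
    std::string escaped;
    for (const char c : value) {
        if (c == '"' || c == '\\') escaped += '\\';
        escaped += c;
    }
    return escaped;
}

// Build the text payload that the voice application would hand to its
// websocket client. Field names are hypothetical.
std::string buildCraneMessage(const std::string& action, const std::string& direction) {
    return "{\"action\":\"" + escapeJson(action) +
           "\",\"direction\":\"" + escapeJson(direction) + "\"}";
}
```

Keeping the payload as plain text means the voice recognition side and the Qt side only need to agree on the field names, not share any code.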

Don’t Forget the Hardware or the Environment

In action, the voice-controlled crane worked as planned. There were, though, a few areas that needed attention to ensure that the proof-of-concept could be deployed smoothly.

First was a hardware concern

Witekio engineers quickly discovered how much depended on the quality of the microphone attached to the crane, which feeds the voice commands into the Vivoka software used to control the crane. Low-quality or unidirectional microphones would diminish the quality of the user experience and the capacity of the software to respond to the user’s commands. Higher-quality microphones with omnidirectional capacity in the crane cabin would catch more of the voice commands and offer the best user interface.

Second was an environment concern

Cranes are generally located on building sites, which are loud places where detecting voice commands reliably is difficult. While crane cabins are generally well insulated from noise, they are far from silent, owing to other construction work nearby and noise from the crane machinery itself. The Witekio team needed to ensure that the voice recognition software could distinguish the crane operator’s commands from the background noise, a very different environment from the one in which regular consumer-grade voice assistants operate.

Conclusion

Voice recognition software is an attractive user control interface for a variety of connected devices, machines, and tools. While the consumer applications are many and diverse, there is also scope for such technologies to be brought to industrial environments. As Witekio’s proof of concept with Vivoka’s VDK has shown, the capacity to control a crane or another large machine with voice commands is not only theoretically possible, but also real and available now. A future where clicks and taps are swapped out for simple conversation is not too far away.

For more on custom voice commands embedded on a device, check out Vivoka’s webinar: Embedding Custom Voice Commands, with Vivoka’s Voice Development Kit.